The study of numerical methods and machine algorithms for systems modeling and computations exhibits widely applicable themes reaching far and beyond delivering outputs to calculator operations. Such themes and concepts are made explicit in Numerical Analysis (Math 128A) at UC Berkeley, which I had the pleasure of taking under the instruction of Professor John Strain.

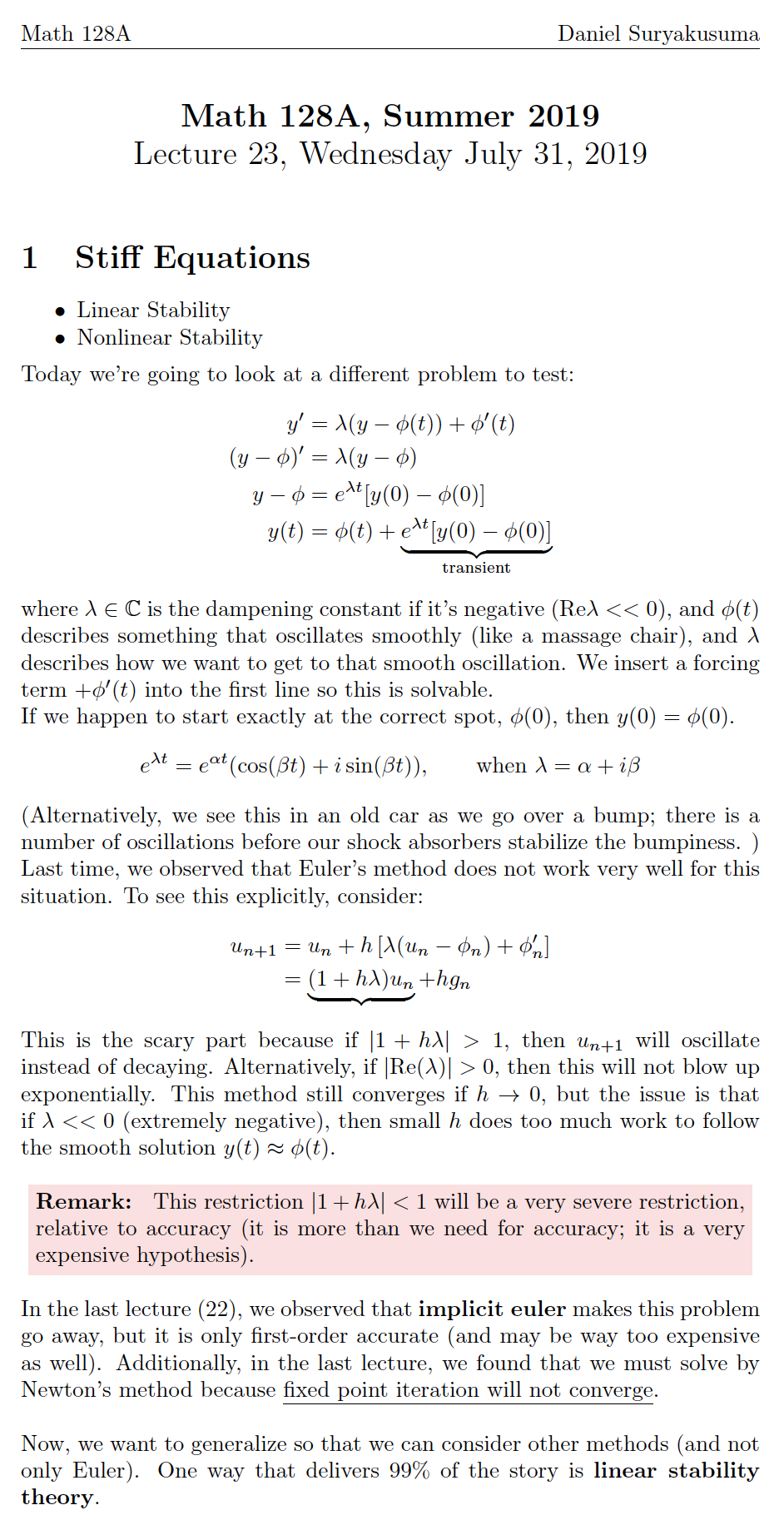

Because lectures by Strain are orthogonal (but complementary) to many ideas in three textbooks used for the course and their presentation thereof, I took extensive notes on material covered in lecture. I hope my compiled lecture notes are of some help to others interested in the subject or even its broader implications. A sample is shown below:

Present throughout this course is the theme of taking an initial guess to a complicated problem and constructing an iteration that brings us closer to (reducing error from) a correct solution. The applicability of this concept is direct and immediate in the sense that in solving our everyday problems, we often simply take the current best course of action. Evaluating results, fine-tuning our solution can yield better results.

In numerical analysis, we treat this concept precisely. For example, to numerically compute a root of a function, we can construct a fixed point iteration and reduce error at each step. With additional conditions satisfied (a la Newton), we even have (higher orders of) rapid convergence to the desired solution. For a system modeled by differential equations, discretization and construction of a linear system can reduce the problem particularly into matrix system. In performing matrix machine operations, iterative refinement can yield truly powerful results.

Even in real-life problems, where there may be a number of ways to solve a problem, there are usually conditions for when one solution performs more favorably over another. A student of mathematics interested in optimization may want to classify these families of solutions and apply metrics to determine conditions for optimality of a given solution.

This is no different in quantitative problems, where such measures typically incorporate precision of accuracy and computation cost. For instance, having a desired solution to more digits of accuracy may be the difference between a failed project and one that is successful.

Suppose a plane experiences sudden complications in the middle of attempting to land. In a dire situation like this, it may be tempting to say this problem is entirely now in the hands of the pilot (surely this is what pilot training is for)! However, this dismisses entire teams of players involved in this real-life problem, starting with the engineers, systems designers, with the pilot as the absolute last in line. An engineer designing the computer systems for the plane must a priori consider these scenarios, pre-determining constraints that ensure the delivery of automatic computations with various real-time variables within a few seconds of computation, to some 'good enough' precision of accuracy to ensure safety.

In complex business problems, analysts might run in circles comparing projections and results in hopes of driving a critical business decision. However, it may be that a decision is required by the end of the week. In situations like these, error (and risk) analyses is imperative to understanding potential limitations of computed results. Placing bounds to understand worst-case scenarios combined with a probabilistic treatment is perhaps the most optimal approach to complex situations like these (and may accentuate the importance of an actuary to access business risk). Regardless, as business decisions of today are almost always data-driven, it is imperative for the data analyst and actuary to develop an understanding of numerical methods and be aware of strengths and limitations of particular machine calculations and algorithms.

== Math 128A, UC Berkeley ==

Lecture notes for the course are made available by lecture topic below, as well as compiled into a single document as above.

Lecture Notes:

1 - Review of Calculus, Computer Arithmetic

2 - IEEE Floating Point (Double Precision) Numbers, Backwards and Forwards Analysis

3 - Algorithms, Big-O, Convergence and Characterizing Error

4 - Iterations: Bisection in Machine Arithmetic

5 - Fixed Point Iterations, Mean Value Theorem and Error Bounds

6 - Optimization of Fixed Point Iterations in Floating Point

7 - Newton's Method, Quadratic Convergence, Dimensional Analysis

8 - no lecture, july 4 independence day holiday

9 - Polynomial Interpolation: Newton, Lagrange, Interpolation Error

10 - Runge Phenomenon, Chebyshev Points

11 - Newton Basis (Divided Differences), Hermite Interpolation

12 - Numerical (Discretized) Differentiation

13 - Quadrature (Numerical Integration) Rules, Euler-Maclaurin: Endpoint-Corrected Trapezoidal Rule (ECTR)

14 - Gaussian Integration, Legendre Polynomials (Discrete Infinite Sums)

15 - midterm, no lecture

16 - Adaptive Quadrature

17 - Integration Weights, Ordinary Differential Equations (ODEs)

18 - ODEs: Fixed Point Iteration (of Functions), Backwards Euler's Method, Local Truncation Error

19 - Runge-Kutta Methods, Conditions for Order of Accuracy

20 - Butcher Arrays, (Iterated) Deferred Correction for Fully Implicit Runge-Kutta Methods

21 - Autonomization, Multi-step Methods, Adams-Bashforth, Adams-Moulton

22 - Method Stability, Convergence, Predictor-Corrector, Stiff Equations

23 - Region of Absolute Stability, Linear Stability (A-stable, L-stable)

24 - Nonlinear Stability (B-stable), Algebraic Stability, Hammer-Hollingsworth

25 - Linear Algebra, Gaussian Elimination

26 - Algorithms: Partial Pivoting, Special Matrices: Diagonally Dominant, Symmetric Positive Definite

27 - Cholesky Factorization, Causality, Residual

28 - Floating Point Error: Cholesky Factorization, Sensitivity to Perturbations

29 - Backwards Error Analysis, Operator Norms, Frobenius Norm, Absolute Norms, Condition Numbers

30 - Iterative Refinement (Improvement), Review: Quadrature, Implicit Variable-Stepsize Adams Method, Cholesky Factorization, Hermite Interpolation

31 - Review: LU, Diagonally Dominant Matrices, Residuals, Symmetric Arrowhead Matrices, Integration Weights for an Exponential Basis, Linear Stability

32 - final exam, no lecture